Test Models

Use the Model Testing tools to test models and review test results.

- Mean Absolute Error (MAE)

- MAE is a useful measure of the quality of a machine learning model's predictions because it is easy to interpret. It is expressed in the units of the predicted quantity, so it states how far off, on average, any given prediction is.
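As a sketch of the standard definition behind this description, let $y_i$ be the true values, $\hat{y}_i$ the predictions, and $n$ the number of samples. These symbols are introduced here for illustration and are not taken from the PhysicsAI interface.

$$
\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n}\left|\hat{y}_i - y_i\right|
$$

For example, predictions of 2, 5, and 7 against true values of 3, 5, and 4 give absolute errors of 1, 0, and 3, so the MAE is $(1 + 0 + 3)/3 = 4/3 \approx 1.33$, in the units of the predicted quantity.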

clear all

clc

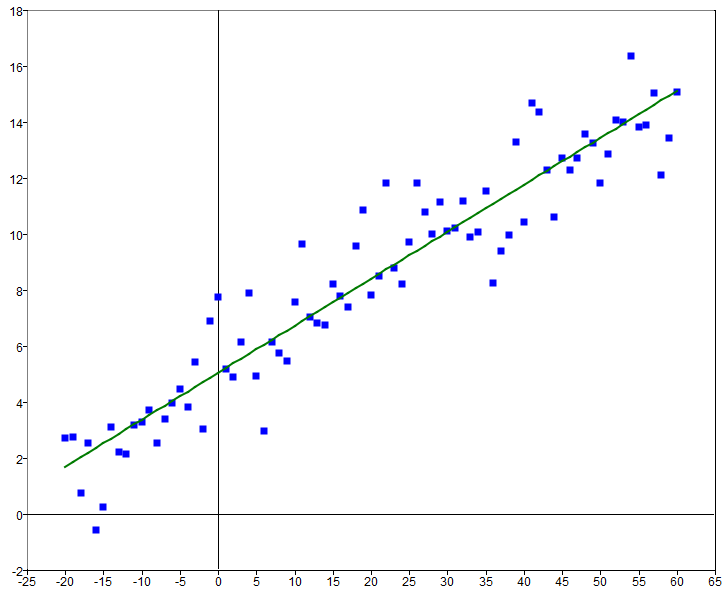

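A = 5/30;   % true slope

B = 5;   % true intercept

d = 8.235;   % noise amplitude

x = -20:1:60;

rand('seed',4);   % fix the random seed so the data are reproducible

y = A*x + B + d*rand(size(x)).*(rand(size(x))-0.5);   % noisy observations

yl = A*x + B;   % noise-free reference line

func = @(p,x) p(1)*x + p(2);   % linear model: slope p(1), intercept p(2)

p = lsqcurvefit(func,[A,B],x,y)   % least-squares fit, starting from [A,B]

yl2 = p(1)*x + p(2);   % fitted line

scatter(x,y);

line(x,yl2);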

MAE = mean(abs(yl2-y))

- From the PhysicsAI ribbon, select the Test Machine Learning Model tool.

Figure 2.

The Test Model dialog opens.

- Select a model and a dataset.

Figure 3.

- Click OK.

The Model Testing dialog opens.

Tip: You can also open the Model Testing dialog by selecting the Review Test Results tool.

Figure 4.

- Click Detailed Report to open the View Score Report.

- Click Show Log to view the testing logs.

- In the Model Testing dialog, select a Run ID and click Display File to view the results in the modeling window.

Note: When you compare predicted and true results, the legend segments are synchronized so that both legends share the same color bands. Each legend's extrema remain specific to its contour and reflect that contour's extreme values. The exception is when the magnitude of a predicted extremum falls below the penultimate value; in that case, the predicted extremum is set to the same value as the true extremum.

- Close the Model Testing dialog.