Transfer Learning in PhysicsAI

Tutorial Level: Intermediate

In this tutorial, you will use a provided pre-trained model and datasets to compare two routes in PhysicsAI: transfer learning and training a model from scratch.

Transfer learning in PhysicsAI adapts a pre-trained model to a new objective by starting from the weights of an existing model instead of from scratch. It is most effective when the new task or dataset is similar to the one the model was originally trained on. In that case it can significantly reduce training time and improve model performance, because the knowledge already captured in the pre-trained weights lets the model reach a good optimum on the new dataset more quickly.
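PhysicsAI performs these steps inside HyperMesh, and its internals are not shown here. As a minimal, tool-independent sketch of the idea, the Python below assumes a one-variable linear model, synthetic data, and plain gradient descent; all function names, datasets, and hyperparameters are hypothetical. It trains on a source task, then continues training on a similar target task starting from those weights, and compares the result with the untouched pre-trained model and with a model trained from zero-initialized weights under the same small step budget.

```python
# Hypothetical toy example: a linear model y = w*x + b stands in for a
# PhysicsAI model. This is NOT the PhysicsAI API.

def make_data(slope, intercept, n=20):
    # Evenly spaced inputs in [0, 1] with an exact linear target.
    xs = [i / (n - 1) for i in range(n)]
    ys = [slope * x + intercept for x in xs]
    return xs, ys

def train(xs, ys, w=0.0, b=0.0, steps=100, lr=0.5):
    # Full-batch gradient descent on mean squared error,
    # starting from the weights passed in (w, b).
    n = len(xs)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

def mae(xs, ys, w, b):
    # Mean absolute error of the model's predictions.
    return sum(abs(w * x + b - y) for x, y in zip(xs, ys)) / len(xs)

# Source task: train long enough to converge (the "pre-trained model").
src_x, src_y = make_data(slope=3.0, intercept=1.0)
w_pre, b_pre = train(src_x, src_y, steps=2000)

# Target task: a similar but shifted relationship, with a small budget.
tgt_x, tgt_y = make_data(slope=3.3, intercept=1.2)
budget = 10
w_tl, b_tl = train(tgt_x, tgt_y, w=w_pre, b=b_pre, steps=budget)  # transfer learning
w_sc, b_sc = train(tgt_x, tgt_y, steps=budget)                    # from scratch

mae_pre = mae(tgt_x, tgt_y, w_pre, b_pre)
mae_tl = mae(tgt_x, tgt_y, w_tl, b_tl)
mae_sc = mae(tgt_x, tgt_y, w_sc, b_sc)
print(f"pre-trained only:  {mae_pre:.4f}")
print(f"transfer learning: {mae_tl:.4f}")
print(f"from scratch:      {mae_sc:.4f}")
```

Both fine-tuned runs use the same number of steps; the transfer learning run ends closer to the target only because it starts from weights that already fit a similar task.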

The datasets used in this tutorial were generated by the Ohio State University Digital Design and Manufacturing Lab. You can see the data visualization here.

Use the Pre-Trained Model

- Open HyperMesh.
- From the menu bar, click to open the PhysicsAI ribbon.
- Create a project.
- Import the model.
- Create datasets.
- Perform transfer learning.

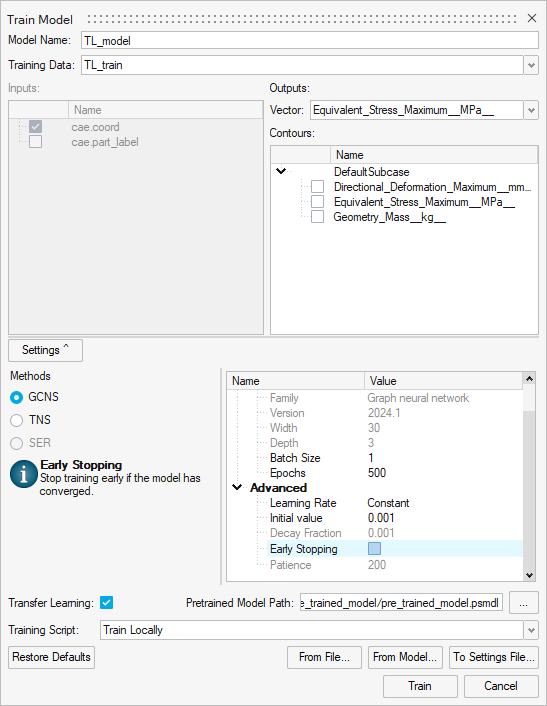

Figure 5.

The Model Training dialog opens.

Figure 6.

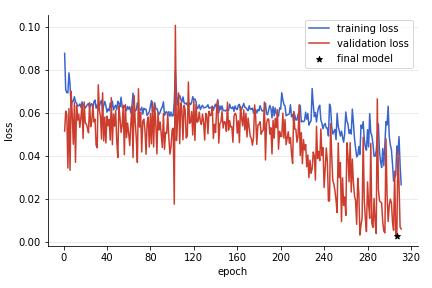

Conduct Training from Scratch

With the new dataset already imported, follow these steps to train a model from scratch. Training from scratch means starting model training without any pre-existing weights, so the model learns only from the data provided.

- Train model.
- Train model.

Results and Conclusion

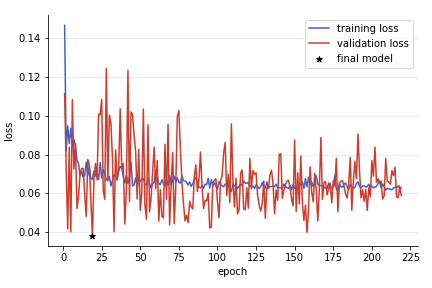

The transfer learning process significantly improves model accuracy compared to using the pre-trained model without transfer learning.

Initially, the pre-trained model may not predict outcomes accurately for the new dataset. After transfer learning, the model's predictions are much closer to the actual values. For example, testing the pre-trained model on the TL_test dataset gave a mean absolute error (MAE) of 16.639, while the TL_model achieved an MAE of 6.889, a reduction of about 59%.
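MAE is the average absolute difference between predicted and actual values, so lower is better. The short Python sketch below shows the calculation on hypothetical values; how PhysicsAI aggregates the error (for example, over nodes, result components, or test designs) is not stated in this tutorial and is not assumed here.

```python
def mean_absolute_error(actual, predicted):
    """Average of |actual - predicted| over all samples."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and the same length")
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)

# Hypothetical values for three test points.
actual = [10.0, 20.0, 30.0]
predicted = [12.0, 18.0, 33.0]
print(mean_absolute_error(actual, predicted))  # (2 + 2 + 3) / 3 = 7/3 ≈ 2.333
```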

Moreover, compared with a model trained from scratch on the same smaller subset of data for the same duration, the transfer learning model achieved a lower MAE (6.889 versus 8.095, roughly 15% lower). This shows how transfer learning uses pre-existing knowledge to improve performance on new but similar tasks.

Overall, transfer learning is advantageous as it facilitates faster convergence and superior performance without the need to start from scratch.

Comparison of Mean Absolute Error (MAE)

| Metric | Pre-Trained Model | Transfer Learning Model | Model Trained from Scratch |
|---|---|---|---|
| MAE | 16.639 | 6.889 | 8.095 |
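The relative reductions can be checked directly from the table values. This small Python sketch computes the fractional drop in MAE, (baseline − improved) / baseline, for transfer learning against each of the other two models; the dictionary keys are illustrative names only.

```python
# MAE values copied from the table above.
table_mae = {
    "pre_trained": 16.639,
    "transfer_learning": 6.889,
    "from_scratch": 8.095,
}

def relative_reduction(baseline, improved):
    """Fractional drop in error going from baseline to improved."""
    return (baseline - improved) / baseline

tl_vs_pre = relative_reduction(table_mae["pre_trained"], table_mae["transfer_learning"])
tl_vs_scratch = relative_reduction(table_mae["from_scratch"], table_mae["transfer_learning"])
print(f"TL vs. pre-trained:  {tl_vs_pre:.1%} lower MAE")      # about 58.6%
print(f"TL vs. from scratch: {tl_vs_scratch:.1%} lower MAE")  # about 14.9%
```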